How to Succeed with & Scale a Drone Program

Companies of all sizes are talking about starting an Unmanned Aerial System (UAS) (a/k/a “drone”) program, but far fewer are establishing the foundations needed to keep these programs from failing. At DJI AirWorks 2018, Rachel Mulholland, Kestrel Consultant, Industrial UAS Programs, presented “Bringing Your Drone Program to Scale: Lessons Learned from Going Big,” which laid out what it takes to succeed with the technology and avoid failure.

Commercial UAV News recently caught up with Rachel to discuss her presentation further. The presentation focused on UAS applications for infrastructure, explored why UAS programs fail, and detailed the foundations of a successful drone program. In the published interview, she shares lessons learned in the field, discusses how to deal with the positive and negative assumptions people have about the technology, explains why a UAS Program Operations Manual is so important, and much more.

Read the full interview in Commercial UAV News, and learn more about Kestrel’s UAS Program Management services.

Talk Drones with Kestrel at the MWFPA Convention

The Midwest Food Products Association’s Annual Convention brings together leaders in the food processing industry to discuss trends, view new technologies, share expertise, and network with professionals in different companies and disciplines.

MWFPA Annual Convention

November 27-29, 2018

Kalahari Resort | Wisconsin Dells, WI

Kestrel Presentation: Bringing Your Drone Program to Scale

Wednesday, November 28 at 9:30 a.m.

Jack Anderson, Kestrel Management Chief Engineer

This year, Kestrel Management will be talking about the growing applicability of drones and best practices from our experience managing industrial-scale drone programs. Kestrel’s presentation will discuss how unmanned aircraft systems (UAS) technology (a/k/a drones) can be applied across various industries. We will cover some of the risks and opportunities associated with building an industrial drone program and share lessons learned from our experiences. We’ll address common questions, including the following:

- What does it take to build an industrial drone program?

- How can UAS technology fit into your current business model?

- What challenges can occur when you introduce drones to your business operations?

- How do you ensure you operate in compliance with FAA regulations?

Join us at the MWFPA Annual Convention and get the information and resources you need to meet the ongoing challenges of customer demands.


5 Questions to Implement Predictive Analytics

How much data does your company generate associated with your business operations? What do you do with that data? Are you using it to inform your business decisions and, potentially, to avoid future risks?

Predictive Analytics and Safety

Predictive analytics is a field that focuses on analyzing large data sets to identify patterns and predict outcomes to help guide decision-making. Many leading companies currently use predictive analytics to identify marketing and sales opportunities. However, the potential benefits of analyzing data in other areas—particularly safety—are considerable.

For example, safety and incident data can be used to predict when and where incidents are likely to occur. Appropriate data analysis strategies can also identify the key factors that contribute to incident risk, thereby allowing companies to proactively address those factors to avoid future incidents.

Key Questions

Many companies already have large amounts of data they are gathering. The key is figuring out how to use that data intelligently to guide future business decision-making. Here are five key questions to guide you in integrating predictive analytics into your operations.

- What are you interested in predicting? What risks are you trying to mitigate?

Defining your desired result not only helps focus the project but also narrows the brainstorming of risk factors and data sources. Refer back to it throughout the project to ensure the work is designed to meet the defined objectives.

- How do you plan to use the predictions to improve operations? What is your goal in implementing a predictive analytics project?

Thinking beyond the project to overall operational improvements provides bigger-picture insight into the desired business outcomes. This helps when deciding what format the results should take to be most useful in the field and/or for management. It also keeps the work focused on variables that can be controlled to improve operations: risks driven by static variables that cannot be changed cannot be mitigated.

- Do you currently collect any data related to this?

Understanding your current data will help determine whether additional data collection is needed. You should be able to describe the characteristics of any data you have for the outcome you want to predict (e.g., digital vs. paper/scanned, quantitative vs. qualitative, accuracy, precision, and whether it is gathered regularly). The benefits and limitations associated with each of these characteristics will be discussed in a future article.

- What are some risk factors for the desired outcome? What are some things that may increase or decrease the likelihood that your outcome will happen?

These factors are the variables you will use for prediction in the model. It is valuable to brainstorm with all relevant subject matter experts (e.g., management, operations, engineering, and third parties, as appropriate) to get a complete picture. After brainstorming, narrow the list of risk factors based on the availability and quality of data, whether the risk factor can be managed or controlled, and a subjective evaluation of each factor’s strength. The modeling process will ultimately suggest which risk factors contribute significantly to the outcome.

- What data do you have, if any, for the risk factors you identified?

Again, you need to understand and be able to describe your current data to determine whether it is sufficient to meet your desired outcomes. Using data that have already been gathered can expedite the modeling process, but only if those data are appropriate in format and content to the process you want to predict. If they aren’t appropriate, using these data in modeling can result in time delays or misleading model results.
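Before any formal modeling, a quick way to see whether the data you have can speak to the risk factors you brainstormed is to compare observed incident rates across each factor’s values. The sketch below is a minimal, hypothetical illustration of that screening step: the field names (`shift`, `crew_experience`, `incident`) and the toy records are made up, and the “spread” ranking is a crude signal, not the statistically valid model the modeling process would ultimately produce.

```python
from collections import defaultdict

def incident_rate_by_factor(records, factor):
    """Observed incident rate for each value of one risk factor.

    `records` is a list of dicts; each has the factor as a key and an
    "incident" key holding 1 (incident occurred) or 0 (no incident).
    """
    counts = defaultdict(lambda: [0, 0])  # factor value -> [incidents, total jobs]
    for record in records:
        tally = counts[record[factor]]
        tally[0] += record["incident"]
        tally[1] += 1
    return {value: incidents / total for value, (incidents, total) in counts.items()}

def rank_factors(records, factors):
    """Rank candidate risk factors by the spread between their highest and
    lowest observed incident rates (a screening signal only, not a test of
    statistical significance)."""
    spreads = {}
    for factor in factors:
        rates = incident_rate_by_factor(records, factor).values()
        spreads[factor] = max(rates) - min(rates)
    return sorted(spreads.items(), key=lambda item: item[1], reverse=True)

# Toy records for illustration only (made-up values, not real incident data).
records = [
    {"shift": "night", "crew_experience": "low",  "incident": 1},
    {"shift": "night", "crew_experience": "high", "incident": 0},
    {"shift": "night", "crew_experience": "low",  "incident": 1},
    {"shift": "day",   "crew_experience": "low",  "incident": 1},
    {"shift": "day",   "crew_experience": "high", "incident": 0},
    {"shift": "day",   "crew_experience": "high", "incident": 0},
]

for factor, spread in rank_factors(records, ["shift", "crew_experience"]):
    print(f"{factor}: spread in incident rate = {spread:.2f}")
```

A factor whose values show little difference in incident rate may still matter in combination with others, which is why this kind of tally only helps narrow the list before modeling.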

Predictive analytics is versatile enough to help companies analyze a wide variety of problems, provided the data, the desired project outcomes, and the business/operational improvements are well-defined. With predictive analytics, companies gain the capacity to:

- Explore and investigate past performance

- Gain the insights needed to turn vast amounts of data into relevant and actionable information

- Create statistically valid models to facilitate data-driven decisions


OSHA Clarifies Position on Incentive Programs

OSHA has issued a memorandum clarifying the agency’s position on workplace safety incentive programs and drug testing. OSHA’s rule prohibiting employer retaliation against employees for reporting work-related injuries or illnesses does not prohibit workplace safety incentive programs or post-incident drug testing. The Department believes that many employers who implement safety incentive programs and/or conduct post-incident drug testing do so to promote workplace safety and health. Action taken under a safety incentive program or post-incident drug testing policy would violate OSHA’s anti-retaliation rule if the employer took the action to penalize an employee for reporting a work-related injury or illness, rather than for the legitimate purpose of promoting workplace safety and health. For more information, see the memorandum.


Kestrel to Present at AirWorks 2018

The AirWorks 2018 Conference is focused on the growing commercial drone industry and how developers, partners, and operators can work to reshape the global economy with drones. This year, Kestrel Management will be teaming with Union Pacific Railroad to talk about our experience and lessons learned from managing industrial-scale drone programs.

AirWorks 2018

October 30 – November 1, 2018

Dallas, Texas

Kestrel Presentation: Bringing Your Drone Program to Scale: Lessons Learned from Going Big

Thursday, November 1 at 11:00 a.m.

Rachel Mulholland, Kestrel Management Consultant, Industrial UAS Programs

Edward Adelman, Union Pacific Railroad, General Director of Safety

This presentation will discuss the risks and opportunities associated with building an industrial drone program and share some of the lessons learned from our experience. We’ll discuss common questions, including the following:

- What does it take to build an industrial drone program?

- How can UAS technology fit into your current business model?

- What challenges can occur with fleets of certified remote pilots and unmanned vehicles?

- How do you ensure you operate in compliance with FAA regulations?

- What are some common pitfalls to avoid and best practices to incorporate into your program?

Why You Should Attend

If you currently have a drone program or are looking to implement one, this event is for you!

- Attend sessions focused on the industry track most relevant to your business: construction, energy, agriculture, public safety, infrastructure

- Network with companies that are on the forefront of enterprise drone adoption

- Get a preview of the latest drone technologies

- Receive hands-on training from experienced industry leaders and instructors

USTR Finalizes China 301 List 3 Tariffs

On Monday, September 17, 2018, the Office of the United States Trade Representative (USTR) released a list of approximately $200 billion worth of Chinese imports, including hundreds of chemicals, that will be subject to additional tariffs. The additional tariffs take effect September 24, 2018, at an initial rate of 10 percent. Starting January 1, 2019, the additional tariffs will increase to 25 percent.

The Administration also removed nearly 300 items from the proposed list but did not provide a specific list of the excluded products. The removed items include certain consumer electronics, such as smart watches and Bluetooth devices; certain chemical inputs for manufactured goods, textiles, and agriculture; and certain health and safety products, such as bicycle helmets and child safety furniture (e.g., car seats and playpens).

Individual companies may want to review the list to determine the status of Harmonized Tariff Schedule (HTS) codes of interest.
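To see what the two-step schedule means for cost planning, the arithmetic can be sketched as below. This is a hypothetical illustration only: it assumes the good’s HTS code is on the final List 3, treats `value` as the dutiable customs value, computes only the *additional* tariff (not the regular duty), and encodes the rates and dates exactly as announced on September 17, 2018. It does not reflect any later changes to the schedule.

```python
from datetime import date

# Additional-tariff schedule for List 3 goods as announced by USTR on
# September 17, 2018. Later changes to the schedule are NOT reflected here.
SCHEDULE = [
    (date(2018, 9, 24), 10),  # (effective date, additional tariff in percent)
    (date(2019, 1, 1), 25),
]

def additional_tariff_percent(entry_date: date) -> int:
    """Return the additional tariff (percent) in effect on entry_date."""
    percent = 0  # no additional tariff before the first effective date
    for effective, rate in SCHEDULE:
        if entry_date >= effective:
            percent = rate
    return percent

def additional_tariff(value: float, entry_date: date) -> float:
    """Additional duty owed on a listed good of the given customs value."""
    return value * additional_tariff_percent(entry_date) / 100

# Hypothetical $10,000 shipment of a listed good.
print(additional_tariff(10_000, date(2018, 10, 15)))  # 1000.0
print(additional_tariff(10_000, date(2019, 2, 1)))    # 2500.0
```

Keeping the schedule as a list of (date, rate) pairs makes it easy to edit if the effective dates or rates change.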

NACD Responsible Distribution Cybersecurity Webinar

Join the National Association of Chemical Distributors (NACD) and Kestrel Principal Evan Fitzgerald for a free webinar on Responsible Distribution Code XIII. We will be taking a deeper dive into Code XIII.D., which focuses on cybersecurity and information. Find out ways to protect your company from this constantly evolving threat.

NACD Responsible Distribution Webinar

Code XIII & Cybersecurity Breaches

Thursday, September 20, 2018

12:00–1:00 p.m. (EDT)

Assessing Risk Management Program Maturity

Maturity assessments are designed to tell an organization where it stands in a defined area and, correspondingly, what it needs to do in the future to improve its systems and processes to meet the organization’s needs and expectations. Maturity assessments expose the strengths and weaknesses within an organization (or a program), and provide a roadmap for ongoing improvements.

Holistic Assessments

A thorough program maturity assessment involves building on a standard gap analysis to conduct a holistic evaluation of the existing program, including data review, interviews with key staff, and functional/field observations and validation.

Based on Kestrel’s experience, program maturity is best evaluated by measuring both the program’s structure and design and the consistency of its implementation across the organization. For the most part, a program’s design remains relatively unchanged unless internal modifications are made to the system. Because of this static nature, a “snapshot” provides a reasonable assessment of design maturity. While the design helps to inform operational effectiveness, the implementation/operational maturity model assesses how completely and consistently the program is functioning throughout the organization (i.e., how the program is designed to work vs. how it is working in practice).

Design Maturity

A design maturity model helps to evaluate strategies and policies, practices and procedures, organization and people, information for decision making, and systems and data according to the following levels of maturity:

- Level 1: Initial (crisis management) – Lack of alignment within the organization; undefined policies, goals, and objectives; poorly defined roles; lack of effective training; erratic program or project performance; lack of standardization in tools.

- Level 2: Repeatable (reactive management) – Limited alignment within the organization; lagging policies and plans; seldom known business impacts of actions; inconsistent company operations across functions; culture not focused on process; ineffective risk management; few useful program or project management and controls tools.

- Level 3: Defined (project management) – Moderate alignment across the organization; consistent plans and policies; formal change management system; somewhat defined and documented processes; moderate role clarity; proactive management for individual projects; standardized status reporting; data integrity may still be questionable.

- Level 4: Managed (program management) – Alignment across organization; consistent plans and policies; goals and objectives are known at all levels; process-oriented culture; formal processes with adequate documentation; strategies and forecasts inform processes; well-understood roles; metrics and controls applied to most processes; audits used for process improvements; good data integrity; programs, processes, and performance reviewed regularly.

- Level 5: Optimized (managing excellence) – Alignment from top to bottom of organization; business forecasts and plans guide activity; company culture is evident across the organization; risk management is structured and proactive; process-centered structure; focus on continuous improvement, training, coaching, mentoring; audits for continual improvement; emphasis on “best-in-class” methods.

A gap analysis can help compare the actual program components against best practice standards, as defined by the organization. At this point, assessment questions and criteria should be specifically tuned to assess the degree to which:

- Hazards and risks are identified, sized, and assessed

- Existing controls are adequate and effective

- Plans are in place to address risks not adequately covered by existing controls

- Plans and controls are resourced and implemented

- Controls are documented and operationalized across applicable functions and work units

- Personnel know and understand the controls and expectations and are engaged in their design and improvement

- Controls are being monitored with appropriate metrics and compliance assurance

- Deficiencies are being addressed by corrective/preventive action

- Processes, controls, and performance are being reviewed by management for continual improvement

- Changed conditions are continually recognized and new risks identified and addressed

Implementation/Operational Maturity

The logical next step in the maturity assessment involves shifting focus from the program’s design to a maturity model that measures how well the program is operationalized, as well as the consistency of implementation across the entire organization. This is a measurement of how effectively the design (program static component) has enabled the desired, consistent practice (program dynamic component) within and across the company.

Under this model, the stage of maturity (i.e., initial, implementation in process, fully functional) is assessed in the following areas:

- Adequacy and effectiveness: demonstration of established processes and procedures with clarity of roles and responsibilities for managing key functions, addressing significant risks, and achieving performance requirements across operations

- Consistency: demonstration that established processes and procedures are fully applied and used across all applicable parts of the organization to achieve performance requirements

- Sustainability: demonstration of an established and ongoing method of review of performance indicators, processes, procedures, and practices in-place for the purpose of identifying and implementing measures to achieve continuing improvement of performance
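Because this model rates each area separately and relies on observations across a representative sample of operations, it can help to record the observed stage per area per operation and look at the spread. The sketch below is a hypothetical way to do that: the stage names follow the text, but the operation names, the observations, and the rule of treating the lowest observed stage as the organization-wide stage are assumptions of this illustration, not a prescribed scoring method.

```python
from enum import IntEnum

class Stage(IntEnum):
    """Implementation stages named in the text, ordered from least to most mature."""
    INITIAL = 1
    IN_PROCESS = 2
    FULLY_FUNCTIONAL = 3

AREAS = ("adequacy_effectiveness", "consistency", "sustainability")

def summarize(observations):
    """Summarize stages observed across a sample of operations.

    `observations` maps an operation name to {area: Stage}. For each area,
    returns (lowest stage, highest stage, operations at the lowest stage).
    Treating the lowest observed stage as the organization-wide stage is an
    assumption of this sketch: consistency requires the program to work
    everywhere it applies, so the weakest operation sets the level.
    """
    summary = {}
    for area in AREAS:
        stages = {op: obs[area] for op, obs in observations.items()}
        lowest = min(stages.values())
        laggards = sorted(op for op, stage in stages.items() if stage == lowest)
        summary[area] = (lowest, max(stages.values()), laggards)
    return summary

# Hypothetical field observations from three sampled operations.
observations = {
    "plant_a": {"adequacy_effectiveness": Stage.FULLY_FUNCTIONAL,
                "consistency": Stage.FULLY_FUNCTIONAL,
                "sustainability": Stage.IN_PROCESS},
    "plant_b": {"adequacy_effectiveness": Stage.FULLY_FUNCTIONAL,
                "consistency": Stage.IN_PROCESS,
                "sustainability": Stage.IN_PROCESS},
    "contractor_x": {"adequacy_effectiveness": Stage.IN_PROCESS,
                     "consistency": Stage.INITIAL,
                     "sustainability": Stage.INITIAL},
}

for area, (lowest, highest, laggards) in summarize(observations).items():
    print(f"{area}: {lowest.name} to {highest.name}; lowest at {laggards}")
```

A wide gap between the lowest and highest stage in an area points to inconsistent implementation, which is exactly what this part of the assessment is meant to surface.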

This approach relies heavily on operational validation and seeking objective evidence of implementation maturity by performing functional and field observations and interviews across a representative sample of operations, including contractors.

Cultural Component

Performance within an organization is the combined result of culture, operational systems/controls, and human performance. Culture involves leadership, shared beliefs, expectations, attitudes, and policy about the desired behavior within a specific company. To some degree, culture alone can drive performance. However, without operational systems and controls, the effects of culture are limited and ultimately will not be sustained. Similarly, operational systems/controls (e.g., management processes, systems, and procedures) can improve performance, but these effects also are limited without the reinforcement of a strong culture. A robust culture with employee engagement, an effective management system, and appropriate and consistent human performance are equally critical.

A culture assessment evaluates both culture and program implementation status through interviews and surveys conducted up, down, and across a representative sample of the company’s operations. Observations of company operations (field, facility, and functional) should then be performed to verify and validate the results.

A culture assessment should evaluate key attributes of successful programs, including:

- Leadership

- Vision & Values

- Goals, Policies & Initiatives

- Organization & Structure

- Employee Engagement, Behaviors & Communications

- Resource Allocation & Performance Management

- Systems, Standards & Processes

- Metrics & Reporting

- Continually Learning Organization

- Audits & Assurance

Assessment and Evaluation

Data from document review, interviews, surveys, and field observations are then aggregated, analyzed, and evaluated. Identifying program gaps and issues enables a comparison of what must be improved or developed/added to what already exists. This information is often organized into the following categories:

- Policy and strategy refinements

- Process and procedure improvements

- Organizational and resource requirements

- Information for decision making

- Systems and data requirements

- Culture enhancement and development

From this information, it becomes possible to identify recommendations for program improvements. These recommendations should be integrated into a strategic action plan that outlines the long-term program vision, proposed activities, project sequencing, and milestones. The highest priority actions should be identified and planned to establish a foundation for continual improvement, and allow for a more proactive means of managing risks and program performance.

Audit Program Best Practices: Part 2

Audits provide an essential tool for improving and verifying compliance performance. As discussed in Part 1, there are a number of audit program elements and best practices that can help ensure a comprehensive audit program. Here are 12 more tips to put to use:

- Action item closure. Address repeat findings. Identify patterns, perform root cause analysis, and seek sustainable corrections.

- Training. Training should be done throughout the entire organization, across all levels:

  - Auditors are trained on both technical matters and program procedures.

  - Management is trained on the overall program design, purpose, business impacts of findings, responsibilities, corrections, and improvements.

  - Line operations are trained on compliance procedures and company policy/systems.

- Communications. Communicate with management routinely to discuss status, needs, performance, program improvements, and business impacts. Use business language, defining business impacts in terms of risks, costs, savings, avoided costs/capital expenditures, and benefits. Those accountable for performance need information as close to “real time” as possible, and the Board of Directors should be informed routinely.

- Leadership philosophy. Senior management should set clear top-down expectations for program excellence. Quality excellence in the environmental, health, and safety management system (EHSMS) goes hand in hand with operational and service quality excellence. Learning and continual improvement should be emphasized.

- Roles & responsibilities. Clear roles, responsibilities, and accountabilities need to be established. This includes top management understanding and embracing their roles/responsibilities. Owners of findings/fixes also must be clearly identified.

- Funding for corrective actions. Funding should be allocated to projects based on significance of risk exposure (i.e., systemic/preventive actions receive high priority). The process should incentivize proactive planning and expeditious resolution of significant problem areas and penalize recurrence or back-sliding on performance and lack of timely fixes.

- Performance measurement system. Audit goals and objectives should be nested within the company’s business goals, key performance objectives, and values. A balanced scorecard can display leading and lagging indicators. Metrics should be quantitative, indicative (not all-inclusive), and tied to outcomes that those being measured can influence. Performance measurements should be communicated and widely understood. Information from auditing (e.g., findings, patterns, trends, comparisons) and the status of corrective actions are often reported on compliance dashboards for management review.

- Degree of business integration. Programs, procedures, and methods should be strongly linked to those used in a quality management program; EHS activities should operate in patterns similar to core operations rather than as ancillary, add-on duties. In addition, EHS should be involved in business planning and management of change (MOC). An EHSMS should be well-developed and designed for full business integration, and the audit program should feed critical information into the EHSMS.

- Accountability. Accountability and compensation must be clearly linked at a meaningful level. Use various award/recognition programs to offer incentives to line operations personnel for excellent EHS performance. Make disincentives and disciplinary consequences clear to discourage non-compliant activities.

- Deployment plan & schedule. Best practice combines pilot facility audits, baseline audits (to design programs), tiered audits, and a continuous improvement model. Facility profiles should be developed for all top-priority facilities, covering operational and EHS characteristics as well as regulatory and other requirements.

- Relation of audit program to EHSMS design & improvement objectives. The audit program should be fully interrelated with the EHSMS and feed critical information on systemic needs into the EHSMS design and review process. The audit program addresses the “Evaluation of Compliance” element of international EHSMS standards (e.g., ISO 14001 and OHSAS 18001). The audit baseline helps identify common causes, systemic issues, and needed programs. The EHSMS addresses root causes, defines and improves preventive systems, and helps integrate EHS with core operations. Audits further evaluate and confirm the performance of the EHSMS and guide continuous improvement.

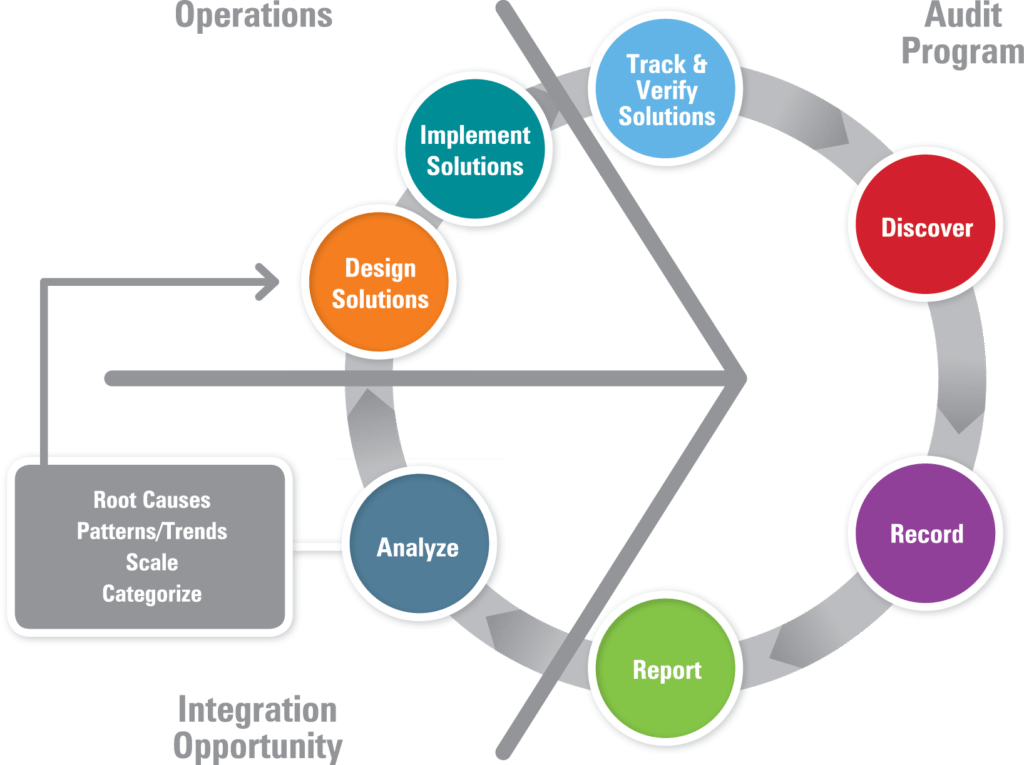

- Relation to best practices. Inventory best practices and share/transfer them as part of audit program results. Use best-in-class facilities as models, and use “problem sites” for improvement planning and training. The accompanying figure illustrates an audit program that goes beyond the traditional “find it, fix it, find it, fix it” repetitive cycle to one that yields real understanding of root causes and patterns. In this model, if issues can be categorized and are widespread, the solutions can be designed as company-wide corrective and preventive measures. The same method can be used to capture and transfer best practices across the organization. These measures and practices are sustained through the continual review and improvement cycle of an EHSMS and are verified by future audits.
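The tips on addressing repeat findings and on spotting wide-scale issues both come down to looking for patterns across audit results. A minimal sketch of that tally is shown below, assuming findings are recorded as (facility, audit ID, finding code) tuples with a consistent set of finding codes; the record layout, codes, and thresholds are hypothetical choices for illustration, not part of any particular audit system.

```python
from collections import Counter, defaultdict

def repeat_findings(findings, min_audits=2):
    """Return (facility, finding_code) pairs recorded in at least `min_audits`
    separate audits of the same facility.

    `findings` is an iterable of (facility, audit_id, finding_code) tuples.
    """
    unique = set(findings)  # count a code at most once per audit
    counts = Counter((facility, code) for facility, _audit, code in unique)
    return sorted(pair for pair, n in counts.items() if n >= min_audits)

def systemic_findings(findings, min_facilities=2):
    """Return finding codes seen at `min_facilities` or more facilities,
    i.e., candidates for company-wide corrective and preventive measures."""
    facilities = defaultdict(set)
    for facility, _audit, code in findings:
        facilities[code].add(facility)
    return sorted(code for code, sites in facilities.items() if len(sites) >= min_facilities)

# Hypothetical audit results; codes are illustrative categories.
findings = [
    ("plant_a", "2017-audit", "drum_labeling"),
    ("plant_a", "2018-audit", "drum_labeling"),
    ("plant_b", "2018-audit", "drum_labeling"),
    ("plant_b", "2018-audit", "spill_plan"),
]

print(repeat_findings(findings))    # [('plant_a', 'drum_labeling')]
print(systemic_findings(findings))  # ['drum_labeling']
```

Either list is a starting point for root cause analysis rather than a conclusion; the value depends on findings being categorized consistently from audit to audit.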

Read Part 1 of the audit program best practices.

Audit Program Best Practices: Part 1

Audits provide an essential tool for improving and verifying compliance performance. Audits may be used to capture regulatory compliance status, management system conformance, adequacy of internal controls, potential risks, and best practices. An audit is typically part of a broader compliance assurance program and can cover some or all of the company’s legal obligations, policies, programs, and objectives.

Companies come in a variety of sizes with a range of different needs, so auditing standards remain fairly flexible. There are, however, a number of audit program elements and best practices that can help ensure a comprehensive audit program:

- Goals. Establishing goals enables recognition of broader issues and can lead to long-term preventive programs. This process allows the organization to get at the causes and focus on important systemic issues. It pushes and guides toward continuous improvement. Goal-setting further addresses the responsibilities and obligations of the Board of Directors for audit and oversight and elicits support from stakeholders.

- Scope. Initially, limit the scope of the audit (e.g., to compliance and risk) to what is manageable and can be done very well, thereby producing performance improvement and a wider understanding and acceptance of objectives. As the program develops and matures (e.g., to cover management systems, company policy, and operational integration), the scope can be expanded and, over time, shift toward systems in place, prevention, efficiency, and best practices.

- Committed resources. Sufficient resources must be provided for staffing and training and then applied, as needed, to support a robust auditing program. Resources also should be applied to the design and continuous improvement of the environmental, health, and safety management system (EHSMS). It is important to track costs and benefits to compare the impacts and results of program improvements.

- Operational focus. All facilities need to be covered at the appropriate level, with emphasis based on potential EHS and business risks. The operational units/practices with the greatest risk should receive the greatest attention (e.g., the 80/20 Rule). Vendors/contractors and related operations that pose risks must be included as part of the program. For smaller, less complex and/or lower risk facilities, lower intensity focus can be justified. For example, relying more heavily on self-assessment and reporting of compliance and less on independent audits may provide better return on investment of assessment resources.

- Audit team. A significant portion of the audit program should be conducted by knowledgeable auditors (independent insiders, third parties, or a combination thereof) with clear independence from the operations being audited and from the direct chain of command. For organizational learning and to leverage compliance standards across facilities, it is good practice to vary at least one audit team member for each audit. Companies often enlist personnel from different facilities and with different expertise to audit other facilities. Periodic third-party audits further bring outside perspective and reduce tendencies toward “home-blindness”.

- Audit frequency. There are several levels of audit frequency, depending on the type of audit:

  - Frequent: Operational (e.g., inspections, housekeeping, maintenance) – done as part of routine EHSMS day-to-day operational responsibilities

  - Periodic: Compliance, systems, actions/projects – conducted annually/semi-annually

  - As needed: For issue follow-up

  - Infrequent: Comprehensive, independent – conducted every three to four years

- Differentiation methods. Differentiation identifies and distinguishes the issues of greatest importance in terms of risk reduction and business performance improvement. The process should be as clear and simple as possible, and the system of priority rating and ranking should be widely understood and agreed upon. The rating system can address severity and probability levels, as well as the complexity/difficulty of corrective actions and the time required to complete them.

- Legal protection. Attorney privilege for audit processes and reports is advisable where risk/liability is deemed significant, especially for third-party independent audits. To the extent possible, make the audit process and reports management tools that guide continuous improvement. Organizations should follow the due diligence elements of the USEPA audit policy.

- Procedures. Describe and document the audit process for consistent, efficient, effective, and reliable application. The best way to do this is to involve both auditors and those being audited in the procedure design. Audit procedures should be tailored to the specific facility/operation being audited. Documented procedures should be used to train both auditors and those accountable for operations being audited. Procedures can be launched using a pilot facility approach to allow for initial testing and fine-tuning. Keep procedures current and continually improve them based on practical application. Audits include document and record review (corporate and facility), interviews, and observations.

- Protocols & tools. Develop specific and targeted protocols that are tailored to operational characteristics and based on applicable regulations and requirements for the facility. Use “widely accepted or standard practice” as go-by tools to aid in developing protocols (e.g., ASTM site assessment standards; ISO 14010 audit guidance; audit protocols based on EPA, OSHA, MSHA, Canadian regulatory requirements; GEMI self-assessment tools; proprietary audit protocol/tools). As protocols are updated, the ability to evaluate continuous improvement trends must be maintained (i.e., trend analysis).

- Information management & analysis. Procedures should be well-defined, clear, and consistent to enable the organization to analyze trends, identify systemic causes, and pinpoint recurring problem areas. Analysis should prompt communication of issues and differentiation among findings based on significance. Audit reports should be issued in a predictable and timely manner. It is desirable to orient the audit program toward organizational learning and continual improvement, rather than a “gotcha” philosophy. “Open book” approaches help learning by letting facility managers know in advance what the audit protocols are and how the audits will be conducted.

- Verification & corrective action. Corrective actions require corporate review, top management-level attention, and management accountability for timely completion. A robust root cause analysis helps ensure not just correction/containment of the existing issue, but also preventive action to ensure controls are in place to prevent the event from recurring. For example, if a drum is labeled incorrectly, the corrective action is to relabel that drum. A robust plan should also look for other drums that might be labeled incorrectly and add and communicate an effective preventive action (e.g., training or posting signs showing a correctly labeled drum).
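The differentiation method described above (rating findings by severity and probability, then considering the difficulty and time needed for correction) can be sketched as a simple ranking. In this hypothetical illustration, the 1-to-5 scales, the severity × probability score, and the tie-break that puts quicker fixes first among equal-risk findings are all assumptions chosen for the example; an organization would define its own scales and ranking rules.

```python
from dataclasses import dataclass

@dataclass
class Finding:
    description: str
    severity: int         # hypothetical scale: 1 (minor) to 5 (severe)
    probability: int      # hypothetical scale: 1 (rare) to 5 (almost certain)
    days_to_correct: int  # estimated time to complete the corrective action

    @property
    def risk_score(self) -> int:
        # Severity x probability, as in a conventional risk matrix.
        return self.severity * self.probability

def prioritize(findings):
    """Highest risk score first; among equal scores, quicker fixes first."""
    return sorted(findings, key=lambda f: (-f.risk_score, f.days_to_correct))

# Hypothetical findings for illustration.
findings = [
    Finding("Drum labeled incorrectly", severity=2, probability=4, days_to_correct=1),
    Finding("No secondary containment at tank farm", severity=5, probability=3, days_to_correct=60),
    Finding("Expired training records", severity=2, probability=4, days_to_correct=14),
]

for f in prioritize(findings):
    print(f.risk_score, f.description)
```

Keeping the scoring rule in one small, visible function supports the goal of a process that is clear, simple, and widely understood.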

Read Part 2 of the audit program best practices.